In my line of work, it is easy to get away with not being au fait with technology. Having previously laughed off my ineptitude by saying ‘there’s a reason I work with people not machines!’, I never considered I had a place in the realm of all things IT and computers. Until I discovered AI. More specifically, Deep Neural Networks. With a specialism in Neuropsychology, I was intrigued by a discipline that aimed to recreate that which we barely understand: the human brain. One of my favourite (and most frustrating) realisations when I began studying more than twenty years ago was that I could dedicate myself to learning about the human brain 24/7 for the rest of my life… and still barely scratch the surface. Yet here was an AI community telling me they could recreate it. I was beyond intrigued, and so began my unexpected delve into the world of all things machine learning. I haven’t looked back.

As a health and wellbeing consultant, I have spent the last decade supporting businesses to be mindful of ethical, cultural and medicolegal considerations in everything they do. It has been a natural progression to expand this work to encompass AI as the technology becomes pervasive. The rapid development of user-friendly interfaces means systems are accessible to those without a technical and/or coding background. Even if you have no interest in AI, you will still engage with and use AI multiple times as you go about your day. It is unavoidable. It is ubiquitous. It is in your business.

Presenting at a recent conference, I was asked: ‘Half my company won’t go near the tools I’ve brought in. How can I get these employees using the technology when they refuse to engage with it?’ It was an interesting question and not the first time I’ve been asked it. Anyone who has benefited from having an onerous task completed in seconds by ChatGPT will extol its wonders. Need a job description written for a new role? Done. Need to write a letter of complaint? Sorted. Need to cheat on an exam? Less admirable, but definitely doable.

We can get so excited at the capability of AI that it’s tempting to ride roughshod over employees who are less than enthused, building AI into our businesses at every level. So back to the question. How do we engage those who are less than willing to explore AI? For a start, we need to stop labelling them as luddites. Those employees who are cautious of AI are not dinosaurs who fear change; they are mindful of ethical pitfalls and we should invite their views to the table.

When it comes to ethics, AI does not have an unblemished history. Published instances of bias and racism within large AI systems have led to an understandable level of caution and reticence amongst employees. For those employees who don’t feel represented within the AI developer community, AI does not offer a wonderful and exciting opportunity. Instead, it threatens to discriminate faster than ever, without human oversight or correction. It isn’t exciting to know that the oppression and challenges individuals have been fighting for generations are now built into machines making decisions on everything from college applications to finances; it’s terrifying.

We know that bias exists within AI systems. Cade Metz wrote in the New York Times that there have been two constants throughout the decade he has been writing about AI: firstly, the continuing and astonishing bursts of development, and secondly, the bias that is woven throughout yet never fully acknowledged or dealt with. There is a seeming acceptance of bias within AI, with companies so excited about its potential and so incredulous at what machines can do that there is no pause to consider harm. To put it another way, in a recent interview an eminent AI developer shrugged and said ‘ethics are only a problem if you care about ethics.’

Rather than wonder how to force reticent employees to engage with AI, we should be asking what we can learn from their hesitancy. AI has the potential to further separate an already disparate workforce, but we can unite when we make space for every voice at the table. If we can embrace AI together with a high standard of ethical scrutiny and a zero-tolerance approach to bias, then we create truly exciting opportunities for all our employees and hold AI to account. When we include all voices, our demands for ethical AI are louder and our business integrity is stronger. That’s how to get all employees on board.

Dr Stephanie Fitzgerald is an experienced Clinical Psychologist and Health and Wellbeing Consultant. Stephanie is passionate about workplace wellbeing and strongly believes everyone can and should be happy at work. Stephanie supports companies across all sectors to keep their employees happy, healthy, safe and engaged. Follow her on Instagram @workplace_wellbeing

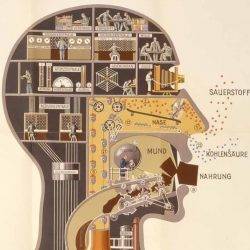

Main image: Fritz Kahn’s Der Mensch Als Industriepalast

May 29, 2024

Not luddite dinosaurs but the sensible voice of caution on AI. And you need to listen

by Stephanie Fitzgerald • AI, Comment, SF, Technology
